PowerCenter lets you control commit and rollback of transactions based on a set of rows that pass through a Transaction Control transformation. A transaction is the set of rows bound by commit or rollback rows. You can define a transaction based on a varying number of input rows. For example, you might define transactions based on a group of rows ordered on a common key, such as employee ID or order entry date.
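To make the idea of a transaction over rows ordered on a common key concrete, here is a minimal Python sketch that splits a sorted row stream into transactions whenever the key changes. It is only a simulation, not PowerCenter code; the row layout and the function name `split_on_key_change` are assumptions made for this example.

```python
from typing import Dict, Iterable, List

Row = Dict[str, object]

def split_on_key_change(rows: Iterable[Row], key: str) -> List[List[Row]]:
    """Group rows (already sorted on `key`) into transactions.

    A new transaction starts whenever the key value differs from the
    previous row, which mimics committing before the first row of a group.
    """
    transactions: List[List[Row]] = []
    previous = object()  # sentinel that never equals a real key value
    for row in rows:
        if row[key] != previous:
            transactions.append([])  # commit boundary: open a new transaction
        transactions[-1].append(row)
        previous = row[key]
    return transactions

# Hypothetical sample rows, ordered on employee_id.
orders = [
    {"employee_id": 10, "order": "A"},
    {"employee_id": 10, "order": "B"},
    {"employee_id": 20, "order": "C"},
]
for txn in split_on_key_change(orders, "employee_id"):
    print([r["order"] for r in txn])
```

With these sample rows, orders A and B land in one transaction and order C in the next.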

In Power Center, you define transaction control at the following levels:

Properties Tab

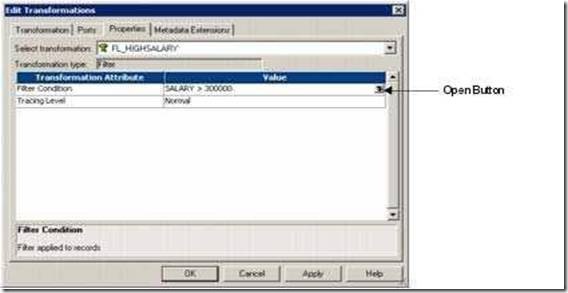

On the Properties tab, you can configure the following properties:

IIF (condition, value1, value2)

The expression contains values that represent actions the Integration Service performs based on the return value of the condition. The Integration Service evaluates the condition on a row-by-row basis. The return value determines whether the Integration Service commits, rolls back, or makes no transaction changes to the row.

When the Integration Service issues a commit or roll back based on the return value of the expression, it begins a new transaction. Use the following built-in variables in the Expression Editor when you create a transaction control expression:

Mapping Guidelines and Validation

Use the following rules and guidelines when you create a mapping with a Transaction Control transformation:

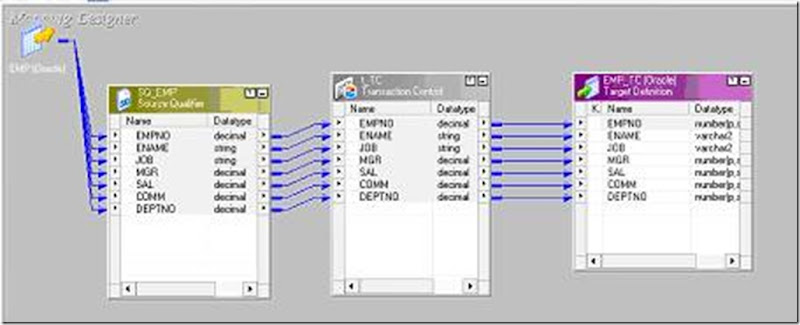

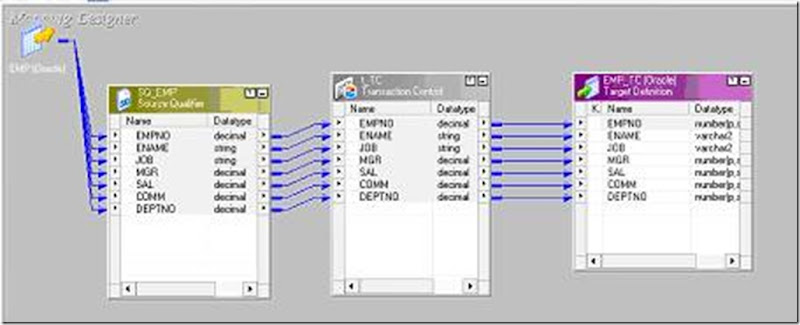

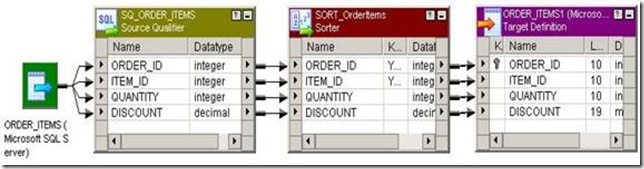

Step 1: Design the mapping.

Step 2: Creating a Transaction Control Transformation.

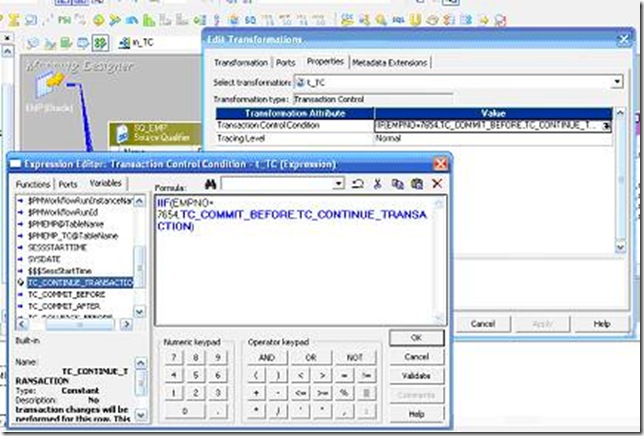

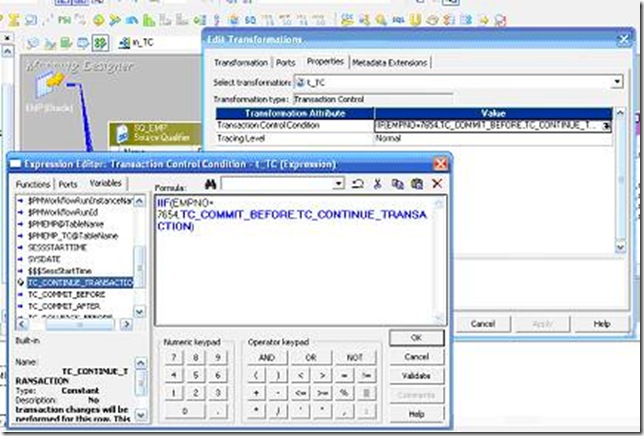

Go to the Properties tab and click on the down arrow to get in to the expression editor window. Later go to the Variables tab and Type IIF(EMpno=7654,) select the below things from the built in functions.

IIF (EMPNO=7654,TC_COMMIT_BEFORE,TC_CONTINUE_TRANSACTION)

Step 4: Preview the output in the target table.

In PowerCenter, you define transaction control at the following levels:

- Within a mapping. You use the Transaction Control transformation to define a transaction through an expression. Based on the return value of the expression, you can choose to commit, roll back, or continue without any transaction changes.

- Within a session. When you configure a session, you configure it for user-defined commit. You can choose to commit or roll back a transaction if the Integration Service fails to transform or write any row to the target.

Properties Tab

On the Properties tab, you can configure the following properties:

- Transaction control expression

- Tracing level

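The transaction control expression uses the IIF function to test each row against a condition. Use the following syntax for the expression:

IIF (condition, value1, value2)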

The expression contains values that represent actions the Integration Service performs based on the return value of the condition. The Integration Service evaluates the condition on a row-by-row basis. The return value determines whether the Integration Service commits, rolls back, or makes no transaction changes to the row.

When the Integration Service issues a commit or roll back based on the return value of the expression, it begins a new transaction. Use the following built-in variables in the Expression Editor when you create a transaction control expression:

- TC_CONTINUE_TRANSACTION. The Integration Service does not perform any transaction change for this row. This is the default value of the expression.

- TC_COMMIT_BEFORE. The Integration Service commits the transaction, begins a new transaction, and writes the current row to the target. The current row is in the new transaction.

- TC_COMMIT_AFTER. The Integration Service writes the current row to the target, commits the transaction, and begins a new transaction. The current row is in the committed transaction.

- TC_ROLLBACK_BEFORE. The Integration Service rolls back the current transaction, begins a new transaction, and writes the current row to the target. The current row is in the new transaction.

- TC_ROLLBACK_AFTER. The Integration Service writes the current row to the target, rolls back the transaction, and begins a new transaction. The current row is in the rolled back transaction.
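The five variables above can be illustrated with a small Python simulation. This is a sketch of the described semantics only, not how the Integration Service is implemented: the function `run_transactions`, the row layout, and the choice to commit any rows still open at the end of the input are all assumptions made for the example.

```python
from typing import Callable, Dict, Iterable, List, Tuple

TC_CONTINUE_TRANSACTION = "TC_CONTINUE_TRANSACTION"
TC_COMMIT_BEFORE = "TC_COMMIT_BEFORE"
TC_COMMIT_AFTER = "TC_COMMIT_AFTER"
TC_ROLLBACK_BEFORE = "TC_ROLLBACK_BEFORE"
TC_ROLLBACK_AFTER = "TC_ROLLBACK_AFTER"

Row = Dict[str, object]
Transactions = List[List[Row]]

def run_transactions(
    rows: Iterable[Row], expression: Callable[[Row], str]
) -> Tuple[Transactions, Transactions]:
    """Return (committed, rolled_back) transactions for a row stream."""
    committed: Transactions = []
    rolled_back: Transactions = []
    current: List[Row] = []

    def close(bucket: Transactions) -> None:
        # End the open transaction (if it has rows) and begin a new one.
        nonlocal current
        if current:
            bucket.append(current)
        current = []

    for row in rows:
        action = expression(row)  # evaluated row by row
        if action == TC_COMMIT_BEFORE:
            close(committed)
            current.append(row)   # row belongs to the new transaction
        elif action == TC_COMMIT_AFTER:
            current.append(row)   # row belongs to the committed transaction
            close(committed)
        elif action == TC_ROLLBACK_BEFORE:
            close(rolled_back)
            current.append(row)   # row belongs to the new transaction
        elif action == TC_ROLLBACK_AFTER:
            current.append(row)   # row belongs to the rolled-back transaction
            close(rolled_back)
        else:                     # TC_CONTINUE_TRANSACTION
            current.append(row)

    close(committed)  # assumption: rows still open at end of input are committed
    return committed, rolled_back

def demo_expression(row: Row) -> str:
    # Hypothetical rule: commit after id 2, roll back after id 4.
    if row["id"] == 2:
        return TC_COMMIT_AFTER
    if row["id"] == 4:
        return TC_ROLLBACK_AFTER
    return TC_CONTINUE_TRANSACTION

done, undone = run_transactions([{"id": i} for i in range(1, 7)], demo_expression)
print([[r["id"] for r in t] for t in done])
print([[r["id"] for r in t] for t in undone])
```

In this run, rows 1 and 2 form a committed transaction, rows 3 and 4 are rolled back, and rows 5 and 6 are committed at the end of the input under the stated assumption.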

Mapping Guidelines and Validation

Use the following rules and guidelines when you create a mapping with a Transaction Control transformation:

- If the mapping includes an XML target, and you choose to append or create a new document on commit, the input groups must receive data from the same transaction control point.

- Transaction Control transformations connected to any target other than relational, XML, or dynamic MQSeries targets are ineffective for those targets.

- You must connect each target instance to a Transaction Control transformation.

- You can connect multiple targets to a single Transaction Control transformation.

- You can connect only one effective Transaction Control transformation to a target.

- You cannot place a Transaction Control transformation in a pipeline branch that starts with a Sequence Generator transformation.

- If you use a dynamic Lookup transformation and a Transaction Control transformation in the same mapping, a rolled-back transaction might result in unsynchronized target data.

- A Transaction Control transformation may be effective for one target and ineffective for another target. If each target is connected to an effective Transaction Control transformation, the mapping is valid.

- Either all targets or none of the targets in the mapping should be connected to an effective Transaction Control transformation.
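As a rough sketch of the last guideline, the following Python snippet checks the "all or none" rule for a set of targets. It models only what the list above states: a Transaction Control transformation is treated as effective when the target is connected to one and the target type is relational, XML, or dynamic MQSeries. The `Target` class, its fields, and the type labels are hypothetical, and the Designer's actual validation considers more than this.

```python
from dataclasses import dataclass
from typing import List

# Target types for which a Transaction Control transformation can be effective,
# per the guidelines above. The string labels are assumptions for this sketch.
EFFECTIVE_TARGET_TYPES = {"relational", "xml", "dynamic_mqseries"}

@dataclass
class Target:
    name: str
    target_type: str
    connected_to_tc: bool  # is a Transaction Control transformation upstream?

def has_effective_tc(target: Target) -> bool:
    """True when the upstream Transaction Control transformation is effective."""
    return target.connected_to_tc and target.target_type in EFFECTIVE_TARGET_TYPES

def mapping_is_valid(targets: List[Target]) -> bool:
    """Either all targets or none have an effective Transaction Control."""
    flags = [has_effective_tc(t) for t in targets]
    return all(flags) or not any(flags)

good = [Target("T_EMP", "relational", True), Target("T_EMP_XML", "xml", True)]
bad = [Target("T_EMP", "relational", True), Target("T_AUDIT", "relational", False)]
print(mapping_is_valid(good), mapping_is_valid(bad))
```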

Step 1: Design the mapping.

Step 2: Create a Transaction Control transformation.

- In the Mapping Designer, click Transformation > Create. Select the Transaction Control transformation.

- Enter a name for the transformation. The naming convention for Transaction Control transformations is TC_TransformationName.

- Enter a description for the transformation.

- Click Create.

- Click Done.

- Drag the ports into the transformation.

- Open the Edit Transformations dialog box, and select the Ports tab.

- Go to the Properties tab and click the down arrow to open the Expression Editor. Type IIF(EMPNO=7654, and then, on the Variables tab, select the transaction control variables from the built-in variables to complete the expression:

IIF (EMPNO=7654,TC_COMMIT_BEFORE,TC_CONTINUE_TRANSACTION)

- Connect all the columns from the transformation to the target table and save the mapping.

- Select the Metadata Extensions tab. Create or edit metadata extensions for the Transaction Control transformation.

- Click OK.
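To see what the expression entered in the steps above does to a row stream, here is a minimal Python sketch. It is a simulation only: the EMPNO values are hypothetical sample data, and the helper names `transaction_control` and `split_transactions` are assumptions made for this example.

```python
from typing import List

COMMIT_EMPNO = 7654  # the value tested in the expression above

def transaction_control(empno: int) -> str:
    # Python equivalent of
    # IIF (EMPNO=7654,TC_COMMIT_BEFORE,TC_CONTINUE_TRANSACTION)
    if empno == COMMIT_EMPNO:
        return "TC_COMMIT_BEFORE"
    return "TC_CONTINUE_TRANSACTION"

def split_transactions(empnos: List[int]) -> List[List[int]]:
    """Split the row stream into transactions at each TC_COMMIT_BEFORE row."""
    transactions: List[List[int]] = [[]]
    for empno in empnos:
        # Commit the open transaction before this row, then start a new one.
        if transaction_control(empno) == "TC_COMMIT_BEFORE" and transactions[-1]:
            transactions.append([])
        transactions[-1].append(empno)
    return [t for t in transactions if t]

sample = [7369, 7499, 7521, 7566, 7654, 7698, 7782]  # hypothetical EMPNO values
print(split_transactions(sample))
# prints [[7369, 7499, 7521, 7566], [7654, 7698, 7782]]
```

The rows before EMPNO 7654 are committed as one transaction, and the row with EMPNO 7654 becomes the first row of the next transaction.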

Step 4: Preview the output in the target table.

![Output preview in the target table](http://lh4.ggpht.com/_MbhSjEtmzI8/Ta8k6Vu7C7I/AAAAAAAAAU0/K_LB4gUcA7U/clip_image002%5B5%5D_thumb%5B3%5D.jpg?imgmax=800)