PMCMD:

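pmcmd is a program you use to communicate with the Integration Service. You can run pmcmd commands from operating system scheduling tools such as cron, or embed them in shell or Perl scripts. pmcmd runs in two modes: command line mode and interactive mode.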

When you run pmcmd in command line mode, you enter the connection information, such as the domain name, Integration Service name, user name, and password, in each command. For example, in the following command the user seller3, with the password “jackson,” sends a request to start the workflow “wf_SalesAvg” in folder “SalesEast” on Integration Service “MyIntService” in domain “MyDomain.”

Example:

```shell
pmcmd startworkflow -sv MyIntService -d MyDomain -u seller3 -p jackson -f SalesEast wf_SalesAvg
```
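Typing the password in every command exposes it in shell history and, on most UNIX systems, in the process list. pmcmd also accepts -uservar (-uv) and -passwordvar (-pv), which take the *names* of environment variables holding the user name and password (these options appear in the ScheduleWorkflow syntax later in this post). The following is a minimal sketch, not an official script: the wrapper function start_workflow and the variable names PM_USER and PM_PASSWORD are hypothetical, and MyIntService and MyDomain are the example names from the command above. The PowerCenter documentation describes storing an encrypted password, produced with the pmpasswd utility, in the password variable; check the documentation for your release.

```shell
#!/usr/bin/env bash
# Minimal sketch: start a workflow without typing credentials on the command line.
# PM_USER and PM_PASSWORD are hypothetical variable names that must be exported
# before the function runs; pmcmd receives only the variable *names*.
start_workflow() {
    local folder="$1" workflow="$2"

    # Fail early with a clear message if either variable is missing.
    if [ -z "${PM_USER:-}" ] || [ -z "${PM_PASSWORD:-}" ]; then
        echo "PM_USER and PM_PASSWORD must be set" >&2
        return 1
    fi

    # The function's return status is pmcmd's return code.
    pmcmd startworkflow -sv MyIntService -d MyDomain \
        -uv PM_USER -pv PM_PASSWORD \
        -f "$folder" "$workflow"
}
```

For example, after exporting PM_USER and PM_PASSWORD, `start_workflow SalesEast wf_SalesAvg` issues the same request as the command above.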

Command Line Mode:

1. At the command prompt, switch to the directory where the pmcmd executable is located.

By default, the PowerCenter installer installs pmcmd in the \server\bin directory.

2. Enter pmcmd followed by the command name and its required options and arguments:

```
pmcmd command_name [-option1] argument_1 [-option2] argument_2...
```
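Switching directories works at an interactive prompt, but scripts and cron jobs usually run with a minimal PATH, so it can be safer to call pmcmd by its full path. The sketch below assumes an INFA_HOME variable that points at the PowerCenter installation directory and a UNIX layout of $INFA_HOME/server/bin (the counterpart of the \server\bin directory mentioned above); find_pmcmd is a hypothetical helper name, and both the variable and the path should be adjusted to your installation.

```shell
#!/usr/bin/env bash
# Print the path of the pmcmd executable, or fail if it cannot be found.
# Assumption: INFA_HOME points at the PowerCenter installation directory,
# and pmcmd lives in its server/bin subdirectory.
find_pmcmd() {
    local candidate
    # Prefer a pmcmd that is already on PATH.
    if candidate="$(command -v pmcmd)"; then
        printf '%s\n' "$candidate"
        return 0
    fi
    # Otherwise fall back to the installation's server/bin directory.
    candidate="${INFA_HOME:-}/server/bin/pmcmd"
    if [ -n "${INFA_HOME:-}" ] && [ -x "$candidate" ]; then
        printf '%s\n' "$candidate"
        return 0
    fi
    echo "pmcmd not found on PATH or in \$INFA_HOME/server/bin" >&2
    return 1
}
```

A script can then run `PMCMD="$(find_pmcmd)" || exit 1` once and call `"$PMCMD" startworkflow ...` for each command.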

Interactive Mode:

1. At the command prompt, switch to the directory where the pmcmd executable is located. By default, the PowerCenter installer installs pmcmd in the \server\bin directory.

2. At the command prompt, type pmcmd. This starts pmcmd in interactive mode and displays the pmcmd> prompt. You do not have to type pmcmd before each command in interactive mode.

3. Enter connection information for the domain and Integration Service.

For example:

```
connect -sv MyIntService -d MyDomain -u seller3 -p jackson
```

4. Type a command and its options and arguments in the following format:

```
command_name [-option1] argument_1 [-option2] argument_2...
```

pmcmd runs the command and displays the prompt again.

5. Type exit to end an interactive session.

For example, the following commands invoke the interactive mode, establish a connection to Integration Service “MyIntService,” and start workflows “wf_SalesAvg” and “wf_SalesTotal” in folder “SalesEast”:

```
pmcmd
pmcmd> connect -sv MyIntService -d MyDomain -u seller3 -p jackson
pmcmd> setfolder SalesEast
pmcmd> startworkflow wf_SalesAvg
pmcmd> startworkflow wf_SalesTotal
```
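Because an interactive session is just a sequence of text commands, a script can generate it for any number of workflows. The sketch below is hypothetical: interactive_commands is an illustrative name, and it reuses the connection values from the session above. Piping its output into pmcmd (for example `interactive_commands SalesEast wf_SalesAvg wf_SalesTotal | pmcmd`) assumes that your pmcmd build reads interactive commands from standard input when it is not attached to a terminal; test that before relying on it. If you need a return code for each command, command line mode is the simpler choice.

```shell
#!/usr/bin/env bash
# Print the interactive-mode commands that connect to the Integration Service,
# select a folder, start each named workflow, and end the session.
# Usage: interactive_commands FOLDER WORKFLOW...
interactive_commands() {
    local folder="$1"
    shift
    echo "connect -sv MyIntService -d MyDomain -u seller3 -p jackson"
    echo "setfolder $folder"
    local wf
    for wf in "$@"; do
        echo "startworkflow $wf"
    done
    echo "exit"
}
```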

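Scripting pmcmd Commands:

When you use pmcmd in a script, pmcmd indicates the success or failure of each command with a return code: 0 means the command succeeded, and any other value means it failed. A script can therefore test `$?` right after each pmcmd call.

For example, the following UNIX shell script checks whether Integration Service “testService” is running, gets its service properties, and then gets details for session task “s_testSessionTask”: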

```shell
#!/usr/bin/env bash
# Sample pmcmd script

# Check if the service is alive
pmcmd pingservice -sv testService -d testDomain
if [ $? -ne 0 ]; then
    # handle error: report it and exit with a non-zero status
    echo "Could not ping service" >&2
    exit 1
fi

# Get service properties
pmcmd getserviceproperties -sv testService -d testDomain
if [ $? -ne 0 ]; then
    # handle error
    echo "Could not get service properties" >&2
    exit 1
fi

# Get task details for session task "s_testSessionTask" of workflow
# "wf_test_workflow" in folder "testFolder"
pmcmd gettaskdetails -sv testService -d testDomain -u Administrator -p adminPass \
    -folder testFolder -workflow wf_test_workflow s_testSessionTask
if [ $? -ne 0 ]; then
    # handle error
    echo "Could not get details for task s_testSessionTask" >&2
    exit 1
fi
```
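The three error checks in the script repeat the same pattern. A small helper can run a command, report a failure together with its return code, and exit with that same code so that whatever called the script (a scheduler or a parent script) sees the failure. This is a hypothetical refactoring sketch, not part of the original sample; run_or_die is an illustrative name.

```shell
#!/usr/bin/env bash
# Run a command; if it fails, print MESSAGE and the command's return code to
# stderr and exit the script with that same return code.
# Usage: run_or_die MESSAGE COMMAND [ARGS...]
run_or_die() {
    local message="$1"
    shift
    "$@"
    local rc=$?
    if [ "$rc" -ne 0 ]; then
        echo "$message (return code $rc)" >&2
        exit "$rc"
    fi
}
```

Each check then becomes a single line, for example `run_or_die "Could not ping service" pmcmd pingservice -sv testService -d testDomain`.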

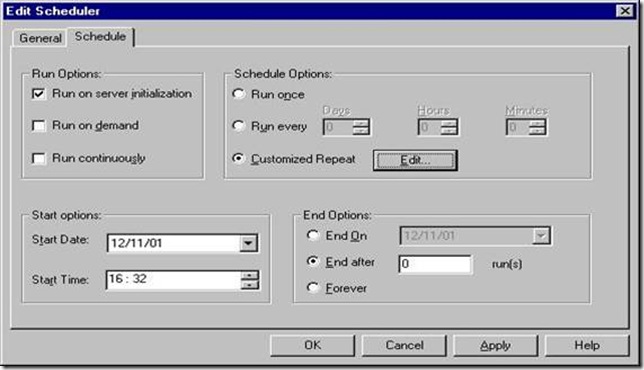

Schedule Workflow:

The ScheduleWorkflow command instructs the Integration Service to schedule a workflow. Use this command to reschedule a workflow that has been removed from the schedule.

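The ScheduleWorkflow command uses the following syntax in command line mode:

```
pmcmd ScheduleWorkflow
<<-service|-sv> service [<-domain|-d> domain] [<-timeout|-t> timeout]>
<<-user|-u> username|<-uservar|-uv> userEnvVar>
<<-password|-p> password|<-passwordvar|-pv> passwordEnvVar>
[<<-usersecuritydomain|-usd> usersecuritydomain|<-usersecuritydomainvar|-usdv> userSecuritydomainEnvVar>]
[<-folder|-f> folder]
workflow
```

In this notation, angle brackets enclose required items, square brackets enclose optional items, and a vertical bar separates alternatives, such as the long (-service) and short (-sv) forms of an option or a literal user name versus a user name environment variable.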
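As a concrete instance of this syntax, the sketch below reschedules one or more workflows in a folder, using the -uv and -pv options so the password stays off the command line. It is hypothetical: schedule_workflows, PM_USER, and PM_PASSWORD are illustrative names, and MyIntService and MyDomain are the example service and domain used earlier in this post. It keeps going when one workflow fails and returns a non-zero status if any ScheduleWorkflow call failed.

```shell
#!/usr/bin/env bash
# Reschedule each named workflow in FOLDER. Continues past failures and
# returns 1 if any ScheduleWorkflow call failed, 0 otherwise.
# PM_USER and PM_PASSWORD are hypothetical variable names; pmcmd reads the
# credentials from the variables they name.
# Usage: schedule_workflows FOLDER WORKFLOW...
schedule_workflows() {
    local folder="$1"
    shift
    local wf failed=0
    for wf in "$@"; do
        if ! pmcmd scheduleworkflow -sv MyIntService -d MyDomain \
            -uv PM_USER -pv PM_PASSWORD -f "$folder" "$wf"; then
            echo "Could not schedule $wf" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

For example, `schedule_workflows SalesEast wf_SalesAvg wf_SalesTotal` puts both example workflows back on their schedules.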

![clip_image002[8] clip_image002[8]](http://lh6.ggpht.com/_MbhSjEtmzI8/TanEv61RKwI/AAAAAAAAAHY/33ZowvsU_TQ/clip_image002%5B8%5D_thumb%5B1%5D.jpg?imgmax=800)